The AI boom is built on a series of bets that the logic of exponential growth will drive the future.

How it works: Exponential doesn’t just mean “number keeps going up.” It means “number keeps going up faster.”
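A minimal Python sketch makes the distinction concrete. The starting values and rates are illustrative assumptions, not figures from any AI forecast. A linear series adds the same amount each period, while an exponential series (here, one that doubles) adds more each period than it did the period before.

```python
def linear(start: float, step: float, n: int) -> list[float]:
    """Grow by a fixed amount each period."""
    return [start + step * t for t in range(n)]

def exponential(start: float, rate: float, n: int) -> list[float]:
    """Grow by a fixed percentage each period (rate=1.0 means doubling)."""
    return [start * (1 + rate) ** t for t in range(n)]

def increments(series: list[float]) -> list[float]:
    """How much the number went up from each period to the next."""
    return [b - a for a, b in zip(series, series[1:])]

# Illustrative inputs only.
lin = linear(1, 1, 6)         # 1, 2, 3, 4, 5, 6
exp = exponential(1, 1.0, 6)  # 1, 2, 4, 8, 16, 32

print(increments(lin))  # [1, 1, 1, 1, 1]
print(increments(exp))  # [1.0, 2.0, 4.0, 8.0, 16.0]
```

The increments are the tell: flat for the linear series, themselves doubling for the exponential one.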

The industry’s belief is that perpetually steepening curves will continue to…

- improve AI technology itself;

- accelerate human demand for its fruits; and

- supercharge society’s ability to quench AI’s thirst for chips, power and data.

Why it matters: The tech industry’s gamble is one that the rest of the world has now bought into by shoveling trillions of dollars onto the table.

Friction point: AI makers expect AI itself to reinforce its own progress in a virtuous cycle. Skeptics fear AI will hit a wall and trigger a global financial meltdown.

Exponential curves, with their steadily repeated doublings, can proceed forever in the abstract — but in the real world, every growth plot eventually hits some kind of limit.

- Moore’s Law, the exponential principle of semiconductor improvement that governed the rise of personal computing, eventually ran into physical limits as transistors shrank toward atomic dimensions and chips could no longer shed the heat they generated.

- Metcalfe’s Law, a principle of accelerating network value (it holds that a network’s value grows with the square of its number of users) that drove the rise of the internet and social media, hit the limits of human population and imperfect human institutions.
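One common way to model growth that hits a limit is the logistic (S-shaped) curve, which tracks an exponential early on and then flattens as it nears a ceiling. The sketch below compares the two. The logistic model, the one-period doubling time and the ceiling `K = 1000` are our illustrative assumptions, not anything measured for AI.

```python
import math

def exponential(x0: float, r: float, t: float) -> float:
    """Unbounded growth: x(t) = x0 * e^(r*t)."""
    return x0 * math.exp(r * t)

def logistic(x0: float, r: float, K: float, t: float) -> float:
    """Growth that saturates at a ceiling K:
    x(t) = K / (1 + ((K - x0) / x0) * e^(-r*t))."""
    return K / (1 + ((K - x0) / x0) * math.exp(-r * t))

# Illustrative parameters (assumptions): doubling every period, so r = ln 2,
# and a hypothetical ceiling K = 1000.
X0, R, K = 1.0, math.log(2), 1000.0

for t in range(0, 21, 4):
    e = exponential(X0, R, t)
    s = logistic(X0, R, K, t)
    print(f"t={t:2d}  exponential={e:10.1f}  logistic={s:7.1f}")
```

Run it and the two columns are nearly identical at first, after which the logistic values level off just under `K` while the exponential ones keep doubling. From inside the early part of the curve, it is hard to tell which one you are on.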

AI can’t escape reaching its own limits unless it somehow proves to be different from every other revolutionary human invention that preceded it.

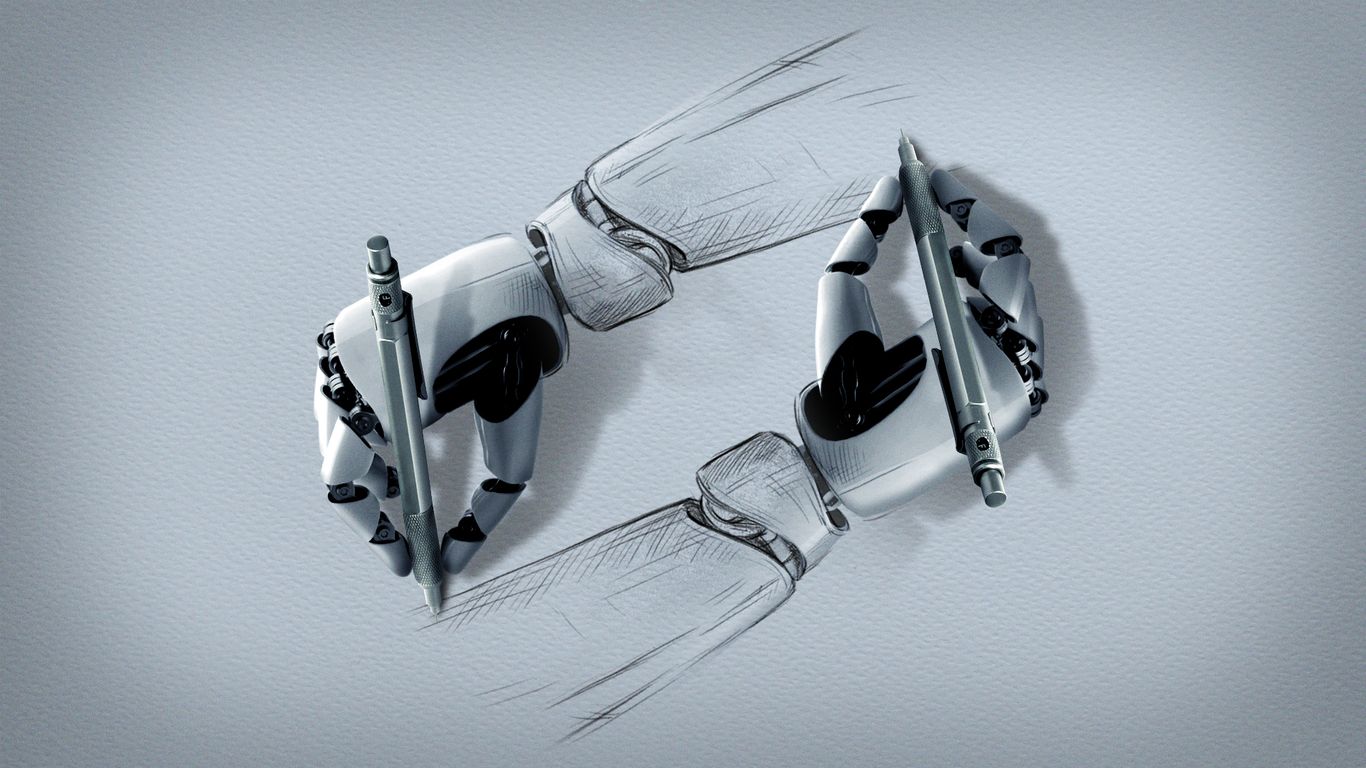

- AI optimists believe the key to that difference lies in the scenario where AI starts to build itself.

- They envision a “take-off” moment when already-rapid advances in AI capacity and reliability start being driven by AI models rewriting themselves in a feedback loop.

- The power of this kind of recursive self-improvement drives every scenario of AI utopia or doomsday.

Yes, but: Exponential equations are elegant closed systems that produce reliably reproducible results. Our world is messily interdependent and our creations fail in sudden, unpredictable ways.

- “Everything is deeply intertwingled,” tech visionary Ted Nelson famously noted a half-century ago. “People keep pretending they can make things hierarchical, categorizable and sequential when they can’t.”

AI’s machine-learning models are themselves neural networks that represent information in an “intertwingled” way.

- But their most successful makers, at OpenAI, Google and Anthropic, have all converged on a brute-force, growth-driven route to the future guided by “scaling laws” that map model size, training data and computing power to improved performance.

- The leaders of these companies believe that if we keep building the same kind of models bigger and bigger, they will keep getting better — until one day they cross the mysterious “take-off” line into self-improvement.

The other side: History suggests that AI’s growth in power and usage will eventually level off because, in the real world, every exponential curve eventually flattens.

The open question is how far the AI curve can go — how many doublings we can put the technology through — before it reaches a limit.

- The limit could be environmental, as AI data center demand for power and water exceeds what nations can support and/or climate disasters trigger revulsion at the technology’s wastefulness.

- The limit could be financial, if external factors (war, pandemic, political instability) or internal problems (revenue disappointments, technical logjams) cause markets to flip from manic to depressive.

- Or the limit could just be a broader disillusionment with AI itself if its hyperbolic promises — personalized PhD tutors! Aging reversed! Universal abundance, and Mars, too! — fail to pan out.
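The “how many doublings” question is, at heart, simple arithmetic: starting from a level x₀, the number of whole doublings before hitting a ceiling K is ⌊log₂(K / x₀)⌋. The helper below, `doublings_until`, is a hypothetical illustration with made-up inputs; it counts doublings step by step rather than calling a logarithm, to avoid floating-point rounding.

```python
def doublings_until(current: float, ceiling: float) -> int:
    """Count whole doublings of `current` that stay at or below `ceiling`.

    Equivalent to floor(log2(ceiling / current)), but counted step by step.
    Hypothetical helper; the inputs used below are made up.
    """
    if current <= 0 or ceiling < current:
        raise ValueError("expected 0 < current <= ceiling")
    n = 0
    while current * 2 <= ceiling:
        current *= 2  # one more doubling still fits under the ceiling
        n += 1
    return n

print(doublings_until(1, 1_000))   # 9   (2**9 = 512 <= 1,000 < 1,024)
print(doublings_until(1, 10_000))  # 13  (2**13 = 8,192 <= 10,000 < 16,384)
```

Because each doubling multiplies the level, raising a ceiling tenfold buys only about three or four more doublings.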

Our thought bubble: Silicon Valley is capitalist to the core, and growth is the ideology of capitalism.

- But growth without an equitable distribution plan can be a recipe for corruption and discontent. And growth without an ethical compass is a blueprint for exploitation and chaos.

- “Growth for the sake of growth is the ideology of the cancer cell,” as the environmentalist Edward Abbey once said.

The AI industry has learned the “bitter lesson” that it should stop trying to teach machines a structured set of facts and instead just build ever-bigger machines that know how to learn.

- But if it continues to focus on growth for its own sake and fails to steer its growth in a purposeful way, it could have more bitter lessons in store.

The bottom line: AI asks each of us to make a trillion-dollar choice. Are we willing to believe that “this time is different,” or do we stick with “what goes up must come down”?